Courses

-

Master Program (8)

Master Program courses

-

Data Science (5)

-

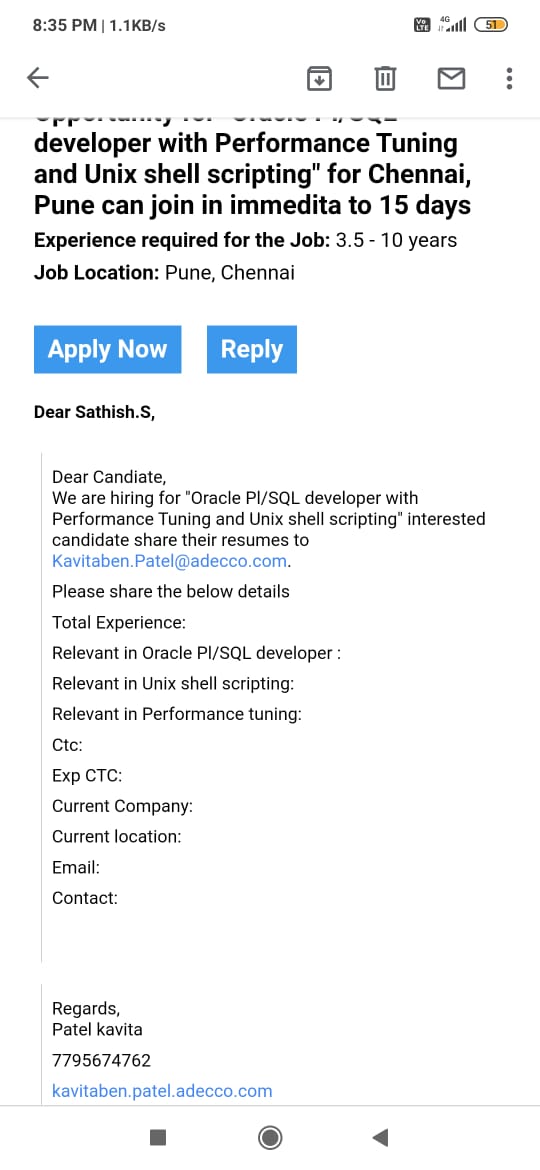

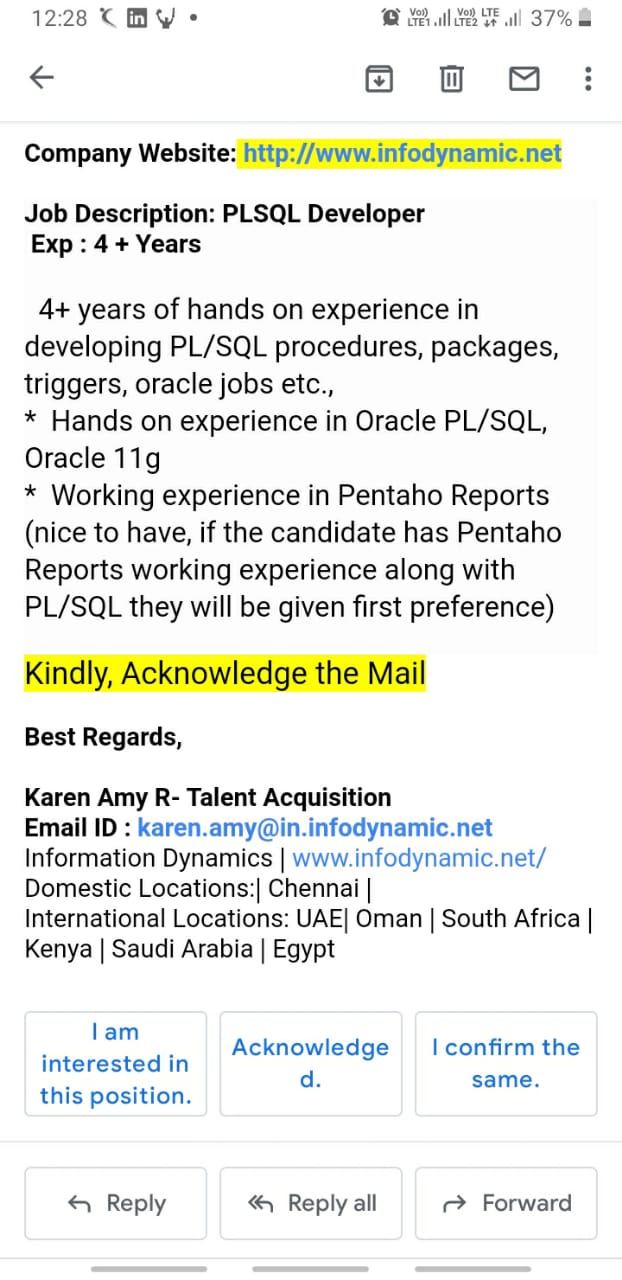

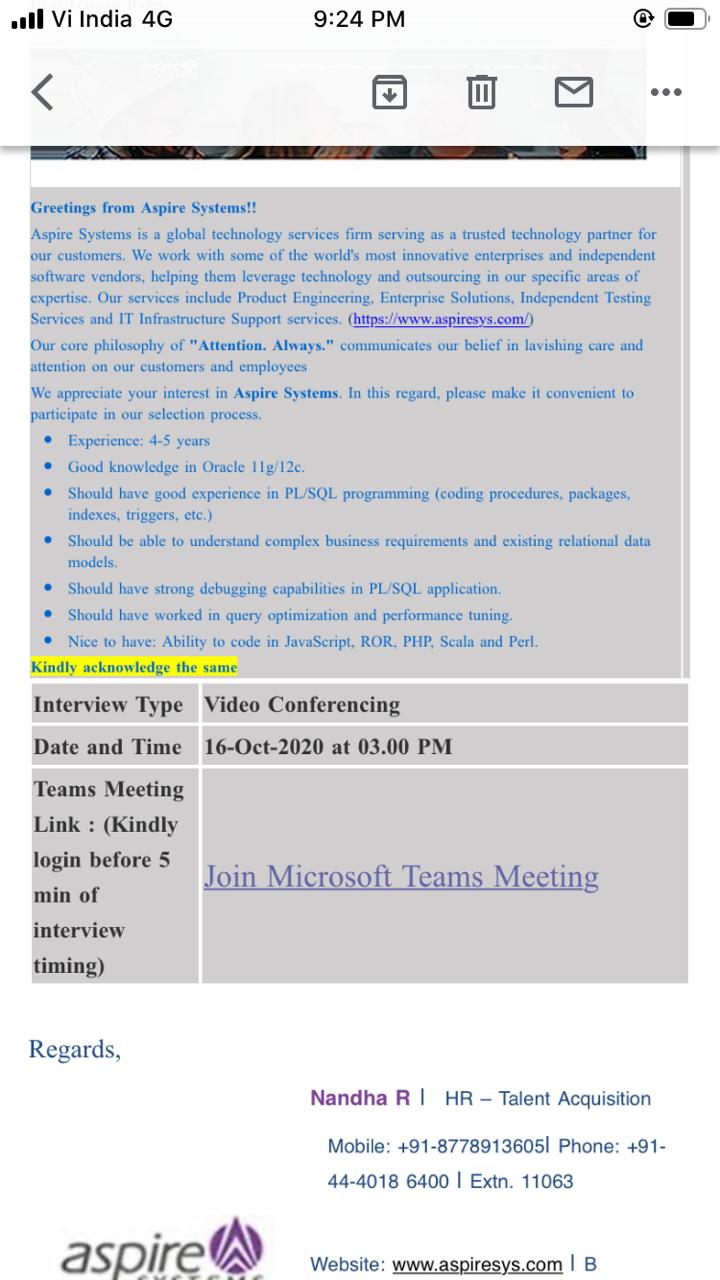

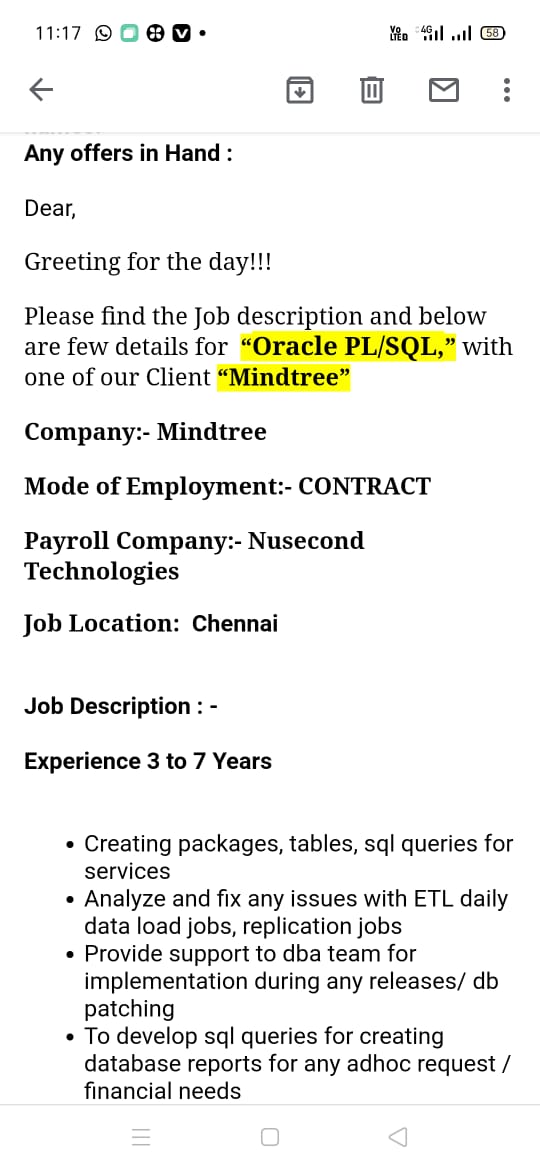

Oracle (48)

Oracle courses

-

RPA (6)

-

Dot Net (8)

-

Java (4)

-

Software Testing (12)

-

Data Warehousing (5)

-

MSBI (8)

-

Programming (9)

-

Other (5)

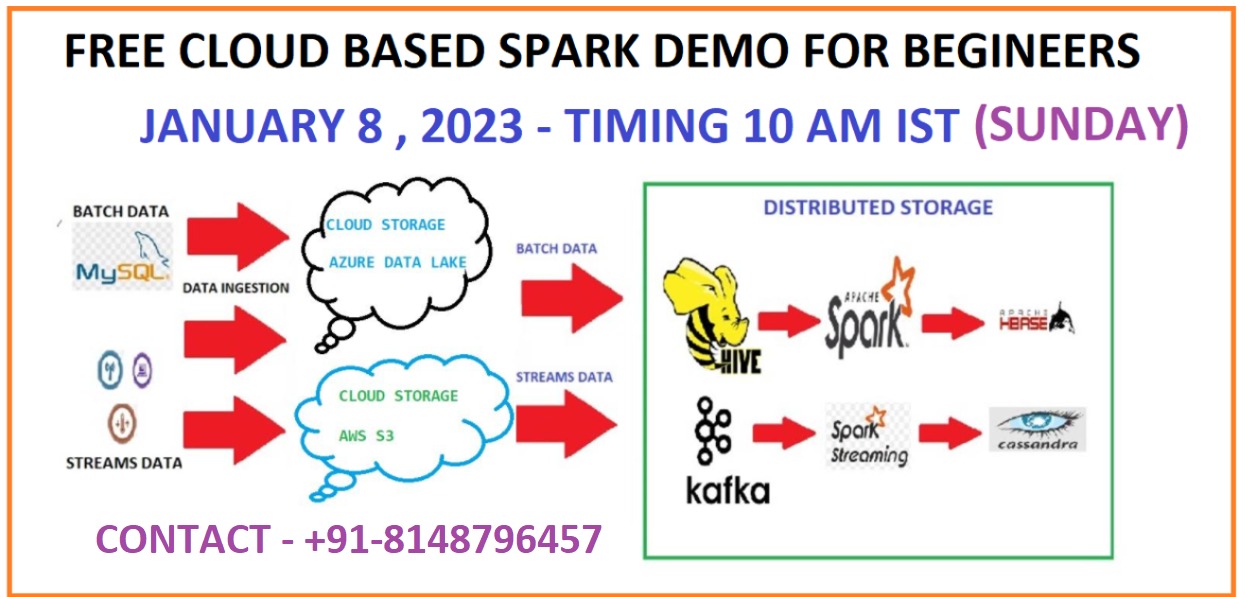

In this hands-on Hadoop Big Data training course, you will execute real-life, industry-based projects using Integrated Lab.

This industry-recognized Big Data certification training course combines the Hadoop Developer, Hadoop Administrator, and Hadoop Tester training courses with analytics using Apache Spark.